Cumulative vs. unfolding IRT models: How practice may defy theory

Abstract

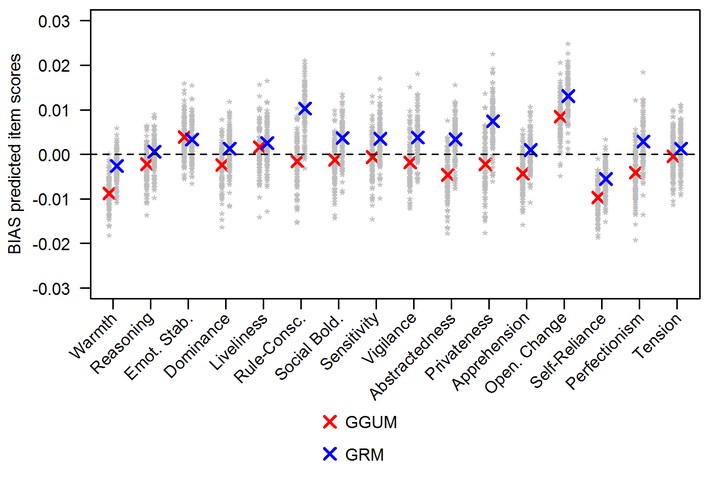

Item response theory (IRT) models are popular statistical tools that allow researchers to extract psychometric information from data. These models are useful in various settings, including educational, psychological, and clinical assessment. However, the quality of the results crucially depends on properly fitting the model of interest to the data. Hence, it is important to assess which model best fits the data at hand. Two important classes of IRT models exist, namely the so-called cumulative and unfolding models (e.g., Drasgow et al., 2010). The former class is especially suitable for cognitive assessment, whereas the latter is better suited to measuring attitudes and preferences. This distinction is mostly theoretical and, surprisingly, has received little exploratory attention using empirical data. This study provides a relevant empirical contribution to improve the current state of affairs. Our purpose was to see how well the most popular unfolding model in use (the GGUM; Roberts et al., 2000) fares against the more classical cumulative polytomous IRT models (e.g., the graded response model; Embretson & Reise, 2000). We fit both cumulative and unfolding models to several empirical datasets (from cognitive and psychological settings). Results showed that the most obvious model choice was not always the one that fit the data best. The most important implication is that practitioners need to be very careful when selecting the model to use, regardless of the apparent structure of the data. Some limitations of our study (e.g., how to deal with item unscalability) are also addressed.
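The contrast between the two model classes can be illustrated through their item response functions. The sketch below is a minimal illustration, not the estimation procedure used in this study: it computes category probabilities for a single item under Samejima's graded response model (cumulative) and under the GGUM (unfolding), then traces the expected item score across the latent trait. The function names and all item parameter values are hypothetical, chosen only for illustration. For these parameters, the expected score under the cumulative model rises monotonically with θ, whereas under the unfolding model it is symmetric around the item location δ and peaks there.

```python
import numpy as np

def grm_probs(theta, a, b):
    """Category probabilities under the graded response model (cumulative).

    theta: latent trait value; a: discrimination;
    b: increasing category thresholds (length K - 1). Returns K probabilities.
    """
    b = np.asarray(b, dtype=float)
    # P(X >= k | theta) for k = 1..K-1 via a logistic link
    p_star = 1.0 / (1.0 + np.exp(-a * (theta - b)))
    # Pad with P(X >= 0) = 1 and P(X >= K) = 0, then difference adjacent bounds
    bounds = np.concatenate(([1.0], p_star, [0.0]))
    return bounds[:-1] - bounds[1:]

def ggum_probs(theta, alpha, delta, tau):
    """Category probabilities under the GGUM (Roberts et al., 2000), an unfolding model.

    alpha: discrimination; delta: item location;
    tau: thresholds tau_1..tau_C (tau_0 = 0 is implied). Returns C + 1 probabilities.
    """
    tau = np.concatenate(([0.0], np.asarray(tau, dtype=float)))
    C = len(tau) - 1
    M = 2 * C + 1                      # number of subjective response categories minus 1
    z = np.arange(C + 1)
    cum_tau = np.cumsum(tau)           # sum_{k=0}^{z} tau_k
    d = theta - delta
    # Each observable category z pools two subjective responses (z and M - z);
    # combine them in log space and subtract the max for numerical stability
    log_num = np.logaddexp(alpha * (z * d - cum_tau),
                           alpha * ((M - z) * d - cum_tau))
    num = np.exp(log_num - log_num.max())
    return num / num.sum()

def expected_score(probs):
    """Expected item score given category probabilities 0..K-1."""
    return float(np.dot(np.arange(len(probs)), probs))

# Hypothetical item parameters, for illustration only
thetas = np.linspace(-3.0, 3.0, 7)
grm_curve = [expected_score(grm_probs(t, a=1.5, b=[-1.0, 0.0, 1.0])) for t in thetas]
ggum_curve = [expected_score(ggum_probs(t, alpha=1.0, delta=0.0, tau=[-1.5, -1.0, -0.5]))
              for t in thetas]
print(np.round(grm_curve, 2))
print(np.round(ggum_curve, 2))
```

Working in log space with `np.logaddexp` avoids overflow when |θ − δ| is large, since the GGUM numerator contains terms of order exp(αM(θ − δ)).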